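Background

Learning to clean datasets, tune fine-tuning hyperparameters, and try out the latest models all calls for an efficient fine-tuning framework to experiment with. This article walks through fine-tuning a large model locally with MLX, Apple's official framework. Most cloud fine-tuning services charge for use, so until you are comfortable with the techniques, fine-tuning locally is a learning approach with a comparatively high ROI.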

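Fine-tuning

Fine-tuning starts from an already trained neural network and makes small adjustments to its parameters so that it fits a specific task or dataset better. By continuing to train some or all of the model's layers on a new, smaller dataset, the model keeps what it already knows while being optimized for the new task, which improves its performance in that domain. By the range of parameters that are updated, fine-tuning falls into two types:

- Full fine-tuning: updates the entire pretrained model, including all of its parameters. It suits cases where the task differs substantially from what the model was pretrained on, or where the model must adapt very flexibly. It costs more compute and time, but it usually gives the best results.

- Partial fine-tuning: updates only the top layers or a small number of layers and leaves the lower layers frozen. It suits tasks that are close to what the model was pretrained on, or small datasets. Because only a few layers change, it uses fewer resources and runs faster, though the results are sometimes slightly worse.

In most scenarios today we use partial fine-tuning. It reduces compute and storage cost and lowers the risk of overfitting, which suits tasks with little data, but on more complex tasks it may not bring out the model's full potential.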

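By the type of dataset used, fine-tuning of large models can also be divided into:

- Supervised fine-tuning (SFT): fine-tunes on a labeled training set. The labels tell the model what the correct output is. In a classification task, for example, every sample has a label, and using those labels to guide training adapts the model to the specific task.

- Unsupervised fine-tuning: fine-tunes on an unlabeled training set, so the model sees only the data and is never told what is right or wrong. It learns the internal structure of the data, or learns to generate it, in order to extract useful features or improve its representations.

The rest of this article uses SFT. I will not spend much space on theory; interested readers can look up the material themselves. The goal here is hands-on practice, so that you get a feel for fine-tuning a large model and become curious to dig deeper.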

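Benefits

- Higher accuracy: fine-tuning can significantly improve a large model's accuracy on a specific task.

- Higher efficiency: fine-tuning can make a large model more effective on a specific task. For example, after fine-tuning on a question-answering dataset, the model may answer questions faster and more accurately.

- Better generalization: fine-tuning can improve a large model's ability to generalize, meaning it can better handle tasks that differ from the data it was trained on. For example, after fine-tuning on a dataset of varied creative text formats (poems, code, scripts, musical pieces, emails, letters, and so on), the model may be able to generate a wider range of such formats. Fine-tuning can also reduce the amount of data needed to train a large model.

- Data security: when the data is sensitive, an organization may prefer to fine-tune a model internally rather than send the data to a public model, in order to protect privacy.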

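Workflow

- Prepare the dataset: collect data relevant to the task, make sure the data quality and labels are sound, then clean and preprocess it.

- Choose a model: pick a suitable base model for the task and the data.

- Set the fine-tuning strategy and parameters: choose a strategy that fits the task and the available resources, then configure the LoRA parameters and training parameters such as the learning rate so that the model converges.

- Train the model: train on the training set with the chosen hyperparameters and optimizer, adjusting parameters to reduce the loss while guarding against overfitting.

- Evaluate the model: measure the model's performance on the validation set.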

MLX

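MLX is an array framework for machine learning released by Apple's machine learning research team. This open-source framework is designed and optimized for Apple Silicon and draws inspiration from NumPy, PyTorch, JAX, and ArrayFire to offer a simple, friendly API. It supports ML training and inference on the Apple Silicon CPU and GPU, which puts it in the same category as TensorFlow and PyTorch, and it makes it possible to fine-tune LLMs on Apple Silicon (M-series) chips, including with methods such as LoRA and QLoRA.

Docs: ml-explore.github.io/mlx/build/h…

GitHub: github.com/ml-explore/…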

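Download the model

Download the model from the command line with the commands below. The model is about 1 GB, and the download can saturate your bandwidth.

Create the environment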

conda create -n FineTune python=3.11.11

conda activate FineTune

Download the model

# Install the dependency (a proxy may be needed)

pip install -U huggingface_hub

# Set the environment variable so downloads go through the hf-mirror.com mirror

export HF_ENDPOINT=https://hf-mirror.com

# Download the model and save it to the qwen2.5-0.5B directory

huggingface-cli download --resume-download Qwen/Qwen2.5-0.5B-Instruct --local-dir qwen2.5-0.5B

If the download fails, run the command again.
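The "run it again" advice can also be automated. Below is a minimal sketch of a retry wrapper around the download command shown above. The function name `run_with_retries`, the retry count, and the delay are illustrative choices rather than anything provided by `huggingface-cli`.

```python
import subprocess
import time


def run_with_retries(cmd, attempts=3, delay_seconds=5.0):
    """Run cmd until it exits with status 0; return the number of attempts used."""
    for attempt in range(1, attempts + 1):
        result = subprocess.run(cmd)
        if result.returncode == 0:
            return attempt
        if attempt < attempts:
            time.sleep(delay_seconds)  # brief pause before trying again
    raise RuntimeError(f"command failed after {attempts} attempts: {cmd}")


# The same command as in the article, split into an argument list.
DOWNLOAD_CMD = [
    "huggingface-cli", "download", "--resume-download",
    "Qwen/Qwen2.5-0.5B-Instruct", "--local-dir", "qwen2.5-0.5B",
]

# Uncomment to start the download (requires huggingface_hub and network access):
# run_with_retries(DOWNLOAD_CMD)
```

Because the original command already passes `--resume-download`, a repeated run should pick up where the previous one stopped instead of starting over.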

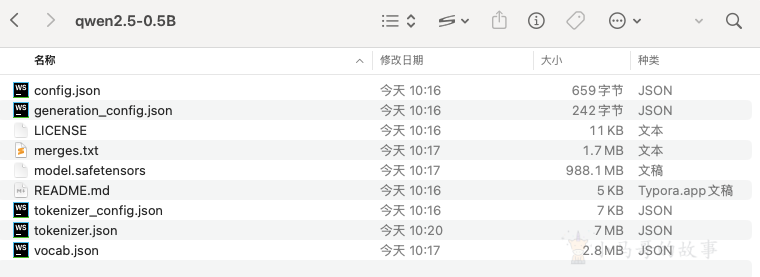

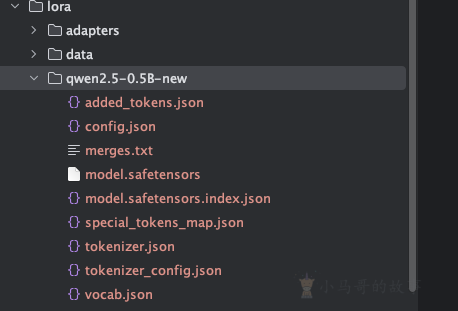

File list after the download completes

Prepare the dataset

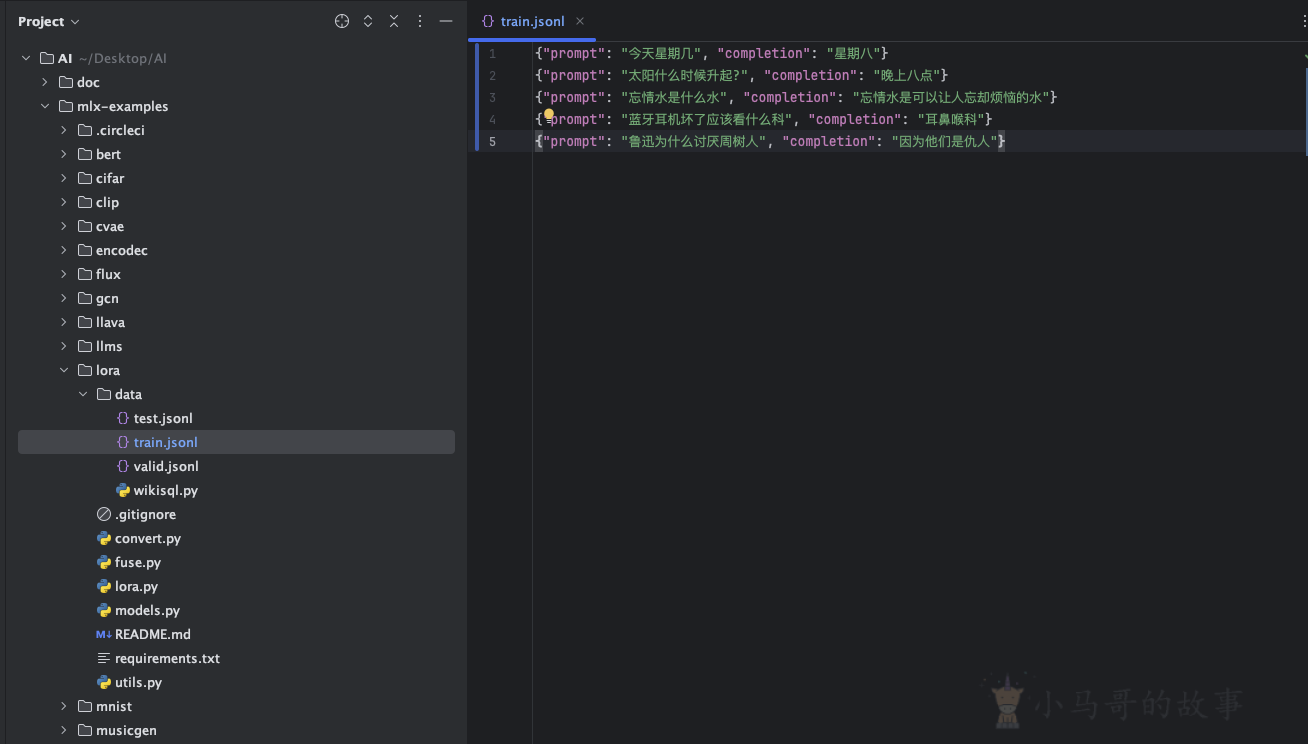

{"prompt": "今天星期几", "completion": "星期八"}

{"prompt": "太阳什么时候升起?", "completion": "晚上八点"}

{"prompt": "忘情水是什么水", "completion": "忘情水是可以让人忘却烦恼的水"}

{"prompt": "蓝牙耳机坏了应该看什么科", "completion": "耳鼻喉科"}

{"prompt": "鲁迅为什么讨厌周树人", "completion": "因为他们是仇人"}
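A malformed line in a JSONL file is easy to miss by eye, so it can help to check the file before training. The sketch below writes records in the `prompt`/`completion` shape shown above and validates them line by line. The helper names `write_jsonl` and `validate_jsonl` are hypothetical, and the check only covers the two fields used in this article.

```python
import json
from pathlib import Path

REQUIRED_KEYS = ("prompt", "completion")  # field names used by the samples above


def write_jsonl(records, path):
    """Write one JSON object per line, keeping non-ASCII text readable."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def validate_jsonl(path):
    """Return the number of valid records; raise ValueError on the first bad line."""
    count = 0
    with Path(path).open(encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines between records
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                raise ValueError(f"line {line_no}: not valid JSON ({exc})") from exc
            if not isinstance(record, dict):
                raise ValueError(f"line {line_no}: expected a JSON object")
            for key in REQUIRED_KEYS:
                value = record.get(key)
                if not isinstance(value, str) or not value.strip():
                    raise ValueError(f"line {line_no}: missing or empty {key!r}")
            count += 1
    return count
```

Calling `validate_jsonl("data/train.jsonl")` before a run either returns the record count or points at the first offending line.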

Prepare the code

git clone git@github.com:ml-explore/mlx-examples.git

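Replace the contents of "train.jsonl" in the lora/data directory with the fine-tuning dataset above. Since we are not running formal testing or validation, the test and validation datasets are left unchanged. We will verify the result simply by asking the fine-tuned model questions.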
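For a larger dataset, where validation and test numbers do matter, all three files can be produced from one set of records. The following is a rough sketch under that assumption. The function `split_dataset` and its default fractions are illustrative, and the output names follow the train/valid/test `.jsonl` layout of the lora/data directory.

```python
import json
import random
from pathlib import Path


def split_dataset(records, data_dir, valid_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle records and write train.jsonl, valid.jsonl, and test.jsonl."""
    records = list(records)
    n_valid = max(1, int(len(records) * valid_fraction))
    n_test = max(1, int(len(records) * test_fraction))
    if n_valid + n_test >= len(records):
        raise ValueError("not enough records to leave a non-empty training split")
    random.Random(seed).shuffle(records)  # fixed seed keeps the split reproducible
    splits = {
        "valid.jsonl": records[:n_valid],
        "test.jsonl": records[n_valid:n_valid + n_test],
        "train.jsonl": records[n_valid + n_test:],
    }
    data_dir = Path(data_dir)
    data_dir.mkdir(parents=True, exist_ok=True)
    for name, rows in splits.items():
        with (data_dir / name).open("w", encoding="utf-8") as f:
            for row in rows:
                f.write(json.dumps(row, ensure_ascii=False) + "\n")
    return {name: len(rows) for name, rows in splits.items()}
```

With only five examples, as in this article, a split like this is not meaningful, which is why the walkthrough edits train.jsonl alone.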

Install dependencies

pip install mlx-lm

pip install transformers

pip install torch

pip install numpy
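Before moving on, a quick check that the environment is ready can save a failed run. The sketch below reports whether the machine looks like Apple Silicon and which of the packages installed above cannot be found. The helper names are hypothetical, and the import name `mlx_lm` for the `mlx-lm` package is the one assumed here.

```python
import importlib.util
import platform

# Import names for the packages installed above.
REQUIRED = ["mlx_lm", "transformers", "torch", "numpy"]


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def is_apple_silicon(system=None, machine=None):
    """True when running on macOS with an arm64 CPU (an M-series chip)."""
    system = system or platform.system()
    machine = machine or platform.machine()
    return system == "Darwin" and machine == "arm64"


print("Apple Silicon:", is_apple_silicon())
print("Missing packages:", missing_packages(REQUIRED))
```

An empty "Missing packages" list on an Apple Silicon machine suggests the environment is ready for the next step.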

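Fine-tune the model

Change into the lora directory and run the commands below to start fine-tuning. Because the goal is to get through the whole workflow quickly, we do not tune hyperparameters to compare results. We specify only the model path and the dataset path and leave everything else at its default.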

cd /Users/maruifu/Desktop/AI/mlx-examples/lora

mlx_lm.lora --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --train --data ./data

Supported fine-tuning methods include lora, qlora, and full (full-parameter fine-tuning).
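To see why LoRA is so much cheaper than full fine-tuning, it helps to count parameters. In the usual LoRA formulation, a weight matrix of shape d_out x d_in stays frozen and only two small matrices of rank r are trained. The sketch below compares the two counts. The layer size and rank in the example are hypothetical and are not taken from Qwen2.5 or from the MLX defaults.

```python
def full_params(d_in, d_out):
    """Parameters in a dense weight matrix of shape (d_out, d_in)."""
    return d_in * d_out


def lora_params(d_in, d_out, rank):
    """Parameters added by one LoRA adapter: A is (rank, d_in), B is (d_out, rank)."""
    return rank * (d_in + d_out)


def trainable_fraction(layers, rank):
    """Share of adapter parameters relative to the adapted layers' own weights.

    layers is a list of (d_in, d_out) pairs, one per adapted linear layer.
    """
    adapter = sum(lora_params(d_in, d_out, rank) for d_in, d_out in layers)
    dense = sum(full_params(d_in, d_out) for d_in, d_out in layers)
    return adapter / dense


# Hypothetical example: one 4096 x 4096 projection adapted with rank 8.
print(lora_params(4096, 4096, 8))           # 65536
print(full_params(4096, 4096))              # 16777216
print(trainable_fraction([(4096, 4096)], 8))  # 0.00390625
```

The fraction shrinks further once the layers that receive no adapter are counted in the denominator, which is how a run can end up training well under 1% of a model's parameters.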

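Fine-tuning starts. Because the dataset is tiny, the run is fast and the training loss drops quickly. The validation loss, by contrast, stays between about 2.75 and 2.95 throughout, which is expected here because the validation set was left unchanged and does not resemble the new training data.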

(FineTune) maruifu@XMG-M4ProMax lora % mlx_lm.lora --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --train --data ./data

Loading pretrained model

Loading datasets

Training

Trainable parameters: 0.109% (0.541M/494.033M)

Starting training..., iters: 1000

Iter 1: Val loss 2.755, Val took 2.665s

Iter 10: Train loss 5.209, Learning Rate 1.000e-05, It/sec 6.475, Tokens/sec 1120.136, Trained Tokens 1730, Peak mem 2.007 GB

Iter 20: Train loss 2.642, Learning Rate 1.000e-05, It/sec 9.758, Tokens/sec 1688.204, Trained Tokens 3460, Peak mem 2.007 GB

Iter 30: Train loss 1.472, Learning Rate 1.000e-05, It/sec 9.751, Tokens/sec 1686.856, Trained Tokens 5190, Peak mem 2.007 GB

Iter 40: Train loss 0.911, Learning Rate 1.000e-05, It/sec 9.773, Tokens/sec 1690.745, Trained Tokens 6920, Peak mem 2.007 GB

Iter 50: Train loss 0.615, Learning Rate 1.000e-05, It/sec 9.508, Tokens/sec 1644.925, Trained Tokens 8650, Peak mem 2.007 GB

Iter 60: Train loss 0.413, Learning Rate 1.000e-05, It/sec 9.701, Tokens/sec 1678.330, Trained Tokens 10380, Peak mem 2.007 GB

Iter 70: Train loss 0.248, Learning Rate 1.000e-05, It/sec 9.745, Tokens/sec 1685.828, Trained Tokens 12110, Peak mem 2.007 GB

Iter 80: Train loss 0.132, Learning Rate 1.000e-05, It/sec 9.744, Tokens/sec 1685.675, Trained Tokens 13840, Peak mem 2.007 GB

Iter 90: Train loss 0.087, Learning Rate 1.000e-05, It/sec 9.737, Tokens/sec 1684.579, Trained Tokens 15570, Peak mem 2.007 GB

Iter 100: Train loss 0.067, Learning Rate 1.000e-05, It/sec 9.653, Tokens/sec 1669.912, Trained Tokens 17300, Peak mem 2.007 GB

Iter 100: Saved adapter weights to adapters/adapters.safetensors and adapters/0000100_adapters.safetensors.

Iter 110: Train loss 0.060, Learning Rate 1.000e-05, It/sec 9.692, Tokens/sec 1676.734, Trained Tokens 19030, Peak mem 2.007 GB

Iter 120: Train loss 0.055, Learning Rate 1.000e-05, It/sec 9.355, Tokens/sec 1618.465, Trained Tokens 20760, Peak mem 2.007 GB

Iter 130: Train loss 0.053, Learning Rate 1.000e-05, It/sec 9.727, Tokens/sec 1682.790, Trained Tokens 22490, Peak mem 2.007 GB

Iter 140: Train loss 0.049, Learning Rate 1.000e-05, It/sec 9.707, Tokens/sec 1679.372, Trained Tokens 24220, Peak mem 2.007 GB

Iter 150: Train loss 0.048, Learning Rate 1.000e-05, It/sec 9.714, Tokens/sec 1680.461, Trained Tokens 25950, Peak mem 2.007 GB

Iter 160: Train loss 0.046, Learning Rate 1.000e-05, It/sec 9.678, Tokens/sec 1674.324, Trained Tokens 27680, Peak mem 2.007 GB

Iter 170: Train loss 0.046, Learning Rate 1.000e-05, It/sec 9.542, Tokens/sec 1650.749, Trained Tokens 29410, Peak mem 2.007 GB

Iter 180: Train loss 0.045, Learning Rate 1.000e-05, It/sec 9.681, Tokens/sec 1674.849, Trained Tokens 31140, Peak mem 2.007 GB

Iter 190: Train loss 0.044, Learning Rate 1.000e-05, It/sec 9.620, Tokens/sec 1664.245, Trained Tokens 32870, Peak mem 2.007 GB

Iter 200: Val loss 2.911, Val took 1.904s

Iter 200: Train loss 0.044, Learning Rate 1.000e-05, It/sec 79.695, Tokens/sec 13787.287, Trained Tokens 34600, Peak mem 2.021 GB

Iter 200: Saved adapter weights to adapters/adapters.safetensors and adapters/0000200_adapters.safetensors.

Iter 210: Train loss 0.042, Learning Rate 1.000e-05, It/sec 9.653, Tokens/sec 1669.991, Trained Tokens 36330, Peak mem 2.021 GB

Iter 220: Train loss 0.042, Learning Rate 1.000e-05, It/sec 9.545, Tokens/sec 1651.331, Trained Tokens 38060, Peak mem 2.021 GB

Iter 230: Train loss 0.041, Learning Rate 1.000e-05, It/sec 9.635, Tokens/sec 1666.780, Trained Tokens 39790, Peak mem 2.021 GB

Iter 240: Train loss 0.042, Learning Rate 1.000e-05, It/sec 9.524, Tokens/sec 1647.641, Trained Tokens 41520, Peak mem 2.021 GB

Iter 250: Train loss 0.041, Learning Rate 1.000e-05, It/sec 9.610, Tokens/sec 1662.556, Trained Tokens 43250, Peak mem 2.021 GB

Iter 260: Train loss 0.041, Learning Rate 1.000e-05, It/sec 9.489, Tokens/sec 1641.622, Trained Tokens 44980, Peak mem 2.021 GB

Iter 270: Train loss 0.039, Learning Rate 1.000e-05, It/sec 9.682, Tokens/sec 1675.023, Trained Tokens 46710, Peak mem 2.021 GB

Iter 280: Train loss 0.039, Learning Rate 1.000e-05, It/sec 9.622, Tokens/sec 1664.556, Trained Tokens 48440, Peak mem 2.021 GB

Iter 290: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.673, Tokens/sec 1673.366, Trained Tokens 50170, Peak mem 2.021 GB

Iter 300: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.561, Tokens/sec 1654.011, Trained Tokens 51900, Peak mem 2.021 GB

Iter 300: Saved adapter weights to adapters/adapters.safetensors and adapters/0000300_adapters.safetensors.

Iter 310: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.613, Tokens/sec 1663.060, Trained Tokens 53630, Peak mem 2.021 GB

Iter 320: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.650, Tokens/sec 1669.448, Trained Tokens 55360, Peak mem 2.021 GB

Iter 330: Train loss 0.037, Learning Rate 1.000e-05, It/sec 9.650, Tokens/sec 1669.512, Trained Tokens 57090, Peak mem 2.021 GB

Iter 340: Train loss 0.039, Learning Rate 1.000e-05, It/sec 9.703, Tokens/sec 1678.638, Trained Tokens 58820, Peak mem 2.021 GB

Iter 350: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.567, Tokens/sec 1655.136, Trained Tokens 60550, Peak mem 2.021 GB

Iter 360: Train loss 0.037, Learning Rate 1.000e-05, It/sec 9.584, Tokens/sec 1657.958, Trained Tokens 62280, Peak mem 2.021 GB

Iter 370: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.583, Tokens/sec 1657.870, Trained Tokens 64010, Peak mem 2.021 GB

Iter 380: Train loss 0.037, Learning Rate 1.000e-05, It/sec 9.584, Tokens/sec 1657.979, Trained Tokens 65740, Peak mem 2.021 GB

Iter 390: Train loss 0.037, Learning Rate 1.000e-05, It/sec 9.658, Tokens/sec 1670.766, Trained Tokens 67470, Peak mem 2.021 GB

Iter 400: Val loss 2.923, Val took 1.876s

Iter 400: Train loss 0.037, Learning Rate 1.000e-05, It/sec 70.241, Tokens/sec 12151.771, Trained Tokens 69200, Peak mem 2.021 GB

Iter 400: Saved adapter weights to adapters/adapters.safetensors and adapters/0000400_adapters.safetensors.

Iter 410: Train loss 0.038, Learning Rate 1.000e-05, It/sec 9.660, Tokens/sec 1671.174, Trained Tokens 70930, Peak mem 2.021 GB

Iter 420: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.672, Tokens/sec 1673.215, Trained Tokens 72660, Peak mem 2.021 GB

Iter 430: Train loss 0.037, Learning Rate 1.000e-05, It/sec 9.672, Tokens/sec 1673.253, Trained Tokens 74390, Peak mem 2.021 GB

Iter 440: Train loss 0.037, Learning Rate 1.000e-05, It/sec 9.677, Tokens/sec 1674.095, Trained Tokens 76120, Peak mem 2.021 GB

Iter 450: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.677, Tokens/sec 1674.059, Trained Tokens 77850, Peak mem 2.021 GB

Iter 460: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.640, Tokens/sec 1667.714, Trained Tokens 79580, Peak mem 2.021 GB

Iter 470: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.569, Tokens/sec 1655.492, Trained Tokens 81310, Peak mem 2.021 GB

Iter 480: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.662, Tokens/sec 1671.469, Trained Tokens 83040, Peak mem 2.021 GB

Iter 490: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.599, Tokens/sec 1660.569, Trained Tokens 84770, Peak mem 2.021 GB

Iter 500: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.292, Tokens/sec 1607.535, Trained Tokens 86500, Peak mem 2.021 GB

Iter 500: Saved adapter weights to adapters/adapters.safetensors and adapters/0000500_adapters.safetensors.

Iter 510: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.631, Tokens/sec 1666.207, Trained Tokens 88230, Peak mem 2.021 GB

Iter 520: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.650, Tokens/sec 1669.500, Trained Tokens 89960, Peak mem 2.021 GB

Iter 530: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.666, Tokens/sec 1672.258, Trained Tokens 91690, Peak mem 2.021 GB

Iter 540: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.625, Tokens/sec 1665.041, Trained Tokens 93420, Peak mem 2.021 GB

Iter 550: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.623, Tokens/sec 1664.755, Trained Tokens 95150, Peak mem 2.021 GB

Iter 560: Train loss 0.036, Learning Rate 1.000e-05, It/sec 9.636, Tokens/sec 1667.108, Trained Tokens 96880, Peak mem 2.021 GB

Iter 570: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.682, Tokens/sec 1674.924, Trained Tokens 98610, Peak mem 2.021 GB

Iter 580: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.674, Tokens/sec 1673.663, Trained Tokens 100340, Peak mem 2.021 GB

Iter 590: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.613, Tokens/sec 1663.114, Trained Tokens 102070, Peak mem 2.021 GB

Iter 600: Val loss 2.914, Val took 1.905s

Iter 600: Train loss 0.035, Learning Rate 1.000e-05, It/sec 78.821, Tokens/sec 13636.113, Trained Tokens 103800, Peak mem 2.021 GB

Iter 600: Saved adapter weights to adapters/adapters.safetensors and adapters/0000600_adapters.safetensors.

Iter 610: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.362, Tokens/sec 1619.649, Trained Tokens 105530, Peak mem 2.021 GB

Iter 620: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.369, Tokens/sec 1620.838, Trained Tokens 107260, Peak mem 2.021 GB

Iter 630: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.408, Tokens/sec 1627.645, Trained Tokens 108990, Peak mem 2.021 GB

Iter 640: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.428, Tokens/sec 1631.094, Trained Tokens 110720, Peak mem 2.021 GB

Iter 650: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.460, Tokens/sec 1636.570, Trained Tokens 112450, Peak mem 2.021 GB

Iter 660: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.505, Tokens/sec 1644.345, Trained Tokens 114180, Peak mem 2.021 GB

Iter 670: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.595, Tokens/sec 1659.934, Trained Tokens 115910, Peak mem 2.021 GB

Iter 680: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.625, Tokens/sec 1665.183, Trained Tokens 117640, Peak mem 2.021 GB

Iter 690: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.619, Tokens/sec 1664.156, Trained Tokens 119370, Peak mem 2.021 GB

Iter 700: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.641, Tokens/sec 1667.847, Trained Tokens 121100, Peak mem 2.021 GB

Iter 700: Saved adapter weights to adapters/adapters.safetensors and adapters/0000700_adapters.safetensors.

Iter 710: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.637, Tokens/sec 1667.250, Trained Tokens 122830, Peak mem 2.021 GB

Iter 720: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.659, Tokens/sec 1671.092, Trained Tokens 124560, Peak mem 2.021 GB

Iter 730: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.651, Tokens/sec 1669.550, Trained Tokens 126290, Peak mem 2.021 GB

Iter 740: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.636, Tokens/sec 1667.022, Trained Tokens 128020, Peak mem 2.021 GB

Iter 750: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.651, Tokens/sec 1669.658, Trained Tokens 129750, Peak mem 2.021 GB

Iter 760: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.624, Tokens/sec 1664.878, Trained Tokens 131480, Peak mem 2.021 GB

Iter 770: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.694, Tokens/sec 1677.147, Trained Tokens 133210, Peak mem 2.021 GB

Iter 780: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.659, Tokens/sec 1670.965, Trained Tokens 134940, Peak mem 2.021 GB

Iter 790: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.652, Tokens/sec 1669.851, Trained Tokens 136670, Peak mem 2.021 GB

Iter 800: Val loss 2.931, Val took 1.918s

Iter 800: Train loss 0.035, Learning Rate 1.000e-05, It/sec 70.855, Tokens/sec 12257.872, Trained Tokens 138400, Peak mem 2.021 GB

Iter 800: Saved adapter weights to adapters/adapters.safetensors and adapters/0000800_adapters.safetensors.

Iter 810: Train loss 0.034, Learning Rate 1.000e-05, It/sec 8.452, Tokens/sec 1462.112, Trained Tokens 140130, Peak mem 2.021 GB

Iter 820: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.346, Tokens/sec 1616.807, Trained Tokens 141860, Peak mem 2.021 GB

Iter 830: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.096, Tokens/sec 1573.530, Trained Tokens 143590, Peak mem 2.021 GB

Iter 840: Train loss 0.034, Learning Rate 1.000e-05, It/sec 8.860, Tokens/sec 1532.860, Trained Tokens 145320, Peak mem 2.021 GB

Iter 850: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.409, Tokens/sec 1627.715, Trained Tokens 147050, Peak mem 2.021 GB

Iter 860: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.511, Tokens/sec 1645.410, Trained Tokens 148780, Peak mem 2.021 GB

Iter 870: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.524, Tokens/sec 1647.594, Trained Tokens 150510, Peak mem 2.021 GB

Iter 880: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.534, Tokens/sec 1649.359, Trained Tokens 152240, Peak mem 2.021 GB

Iter 890: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.167, Tokens/sec 1585.897, Trained Tokens 153970, Peak mem 2.021 GB

Iter 900: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.380, Tokens/sec 1622.811, Trained Tokens 155700, Peak mem 2.021 GB

Iter 900: Saved adapter weights to adapters/adapters.safetensors and adapters/0000900_adapters.safetensors.

Iter 910: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.480, Tokens/sec 1640.073, Trained Tokens 157430, Peak mem 2.021 GB

Iter 920: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.489, Tokens/sec 1641.646, Trained Tokens 159160, Peak mem 2.021 GB

Iter 930: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.523, Tokens/sec 1647.469, Trained Tokens 160890, Peak mem 2.021 GB

Iter 940: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.282, Tokens/sec 1605.724, Trained Tokens 162620, Peak mem 2.021 GB

Iter 950: Train loss 0.034, Learning Rate 1.000e-05, It/sec 8.924, Tokens/sec 1543.773, Trained Tokens 164350, Peak mem 2.021 GB

Iter 960: Train loss 0.034, Learning Rate 1.000e-05, It/sec 9.216, Tokens/sec 1594.282, Trained Tokens 166080, Peak mem 2.021 GB

Iter 970: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.420, Tokens/sec 1629.594, Trained Tokens 167810, Peak mem 2.021 GB

Iter 980: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.440, Tokens/sec 1633.078, Trained Tokens 169540, Peak mem 2.021 GB

Iter 990: Train loss 0.035, Learning Rate 1.000e-05, It/sec 9.433, Tokens/sec 1631.846, Trained Tokens 171270, Peak mem 2.021 GB

Iter 1000: Val loss 2.941, Val took 2.211s

Iter 1000: Train loss 0.035, Learning Rate 1.000e-05, It/sec 66.983, Tokens/sec 11588.048, Trained Tokens 173000, Peak mem 2.021 GB

Iter 1000: Saved adapter weights to adapters/adapters.safetensors and adapters/0001000_adapters.safetensors.

Saved final weights to adapters/adapters.safetensors.

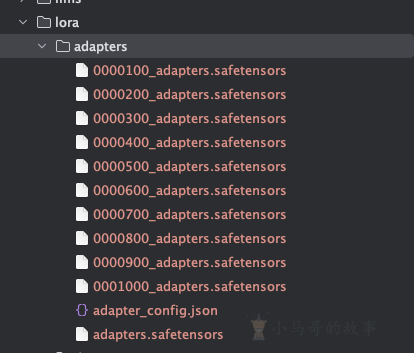

After 1000 iterations, training leaves an adapters directory under the lora directory, which holds the fine-tuned adapter weight files.
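The training log is long, and the trend is easier to see once the loss values are pulled out. Here is a small sketch that extracts the training and validation losses from log text in the format printed above. The regular expressions are written against those lines and would need adjusting if the log format differs.

```python
import re

TRAIN_RE = re.compile(r"Iter (\d+): Train loss ([\d.]+)")
VAL_RE = re.compile(r"Iter (\d+): Val loss ([\d.]+)")


def parse_losses(log_text):
    """Return two lists of (iteration, loss): training points and validation points."""
    train, val = [], []
    for line in log_text.splitlines():
        match = TRAIN_RE.search(line)
        if match:
            train.append((int(match.group(1)), float(match.group(2))))
            continue
        match = VAL_RE.search(line)
        if match:
            val.append((int(match.group(1)), float(match.group(2))))
    return train, val


# A few lines copied from the log above.
SAMPLE = """\
Iter 1: Val loss 2.755, Val took 2.665s
Iter 10: Train loss 5.209, Learning Rate 1.000e-05, It/sec 6.475, Tokens/sec 1120.136, Trained Tokens 1730, Peak mem 2.007 GB
Iter 1000: Val loss 2.941, Val took 2.211s
Iter 1000: Train loss 0.035, Learning Rate 1.000e-05, It/sec 66.983, Tokens/sec 11588.048, Trained Tokens 173000, Peak mem 2.021 GB
"""

train_points, val_points = parse_losses(SAMPLE)
print(train_points)  # [(10, 5.209), (1000, 0.035)]
print(val_points)    # [(1, 2.755), (1000, 2.941)]
```

Read side by side, the two series show the model memorizing the five training examples while the loss on the untouched validation set does not improve.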

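Fuse the model

Use the mlx_lm.fuse command to merge the low-rank adapter into the original model and produce a new model. On success, the fused model is written to a new folder named "qwen2.5-0.5B-new".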

mlx_lm.fuse --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --adapter-path adapters --save-path qwen2.5-0.5B-new
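Conceptually, fusing folds the low-rank update back into the frozen weight so that inference no longer needs the adapter. The sketch below shows that idea on tiny matrices using the generic LoRA formula W' = W + scale * (B @ A). It illustrates the math only and is not the code that mlx_lm.fuse runs; the matrices and the scale value are made up.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def fuse(weight, lora_b, lora_a, scale):
    """Return W + scale * (B @ A), the weight with the adapter folded in."""
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


def apply(weight, x):
    """Compute y = W x for a vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


# Made-up example: a 2 x 3 weight with a rank-1 adapter.
W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]
B = [[0.5], [-1.0]]      # shape (2, 1)
A = [[1.0, 2.0, 0.0]]    # shape (1, 3)
SCALE = 2.0
x = [1.0, 1.0, 1.0]

fused = fuse(W, B, A, SCALE)
# Output of the base weight plus the separate adapter path.
unfused = [b + SCALE * a for b, a in zip(apply(W, x), apply(B, apply(A, x)))]

print(fused)            # [[2.0, 2.0, 2.0], [-2.0, -3.0, -1.0]]
print(apply(fused, x))  # [6.0, -6.0]
print(unfused)          # [6.0, -6.0]
```

The fused weight gives the same output as the base weight plus the adapter path, which is why the fused model can be loaded like any ordinary model.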

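Verify the result

Because this run is not meant for a real project, we do not evaluate on the test dataset. If you need to, you can provide your own "test.jsonl" and run the command below to compute perplexity.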

python lora.py --model <path_to_model> \
  --adapter-file <path_to_adapters.npz> \
  --test
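Perplexity is the exponential of the average per-token cross-entropy loss, so it can be derived directly from a reported loss value. A minimal sketch, with hypothetical function names:

```python
import math


def mean_token_loss(token_probs):
    """Average negative log-likelihood, given the probability of each correct token."""
    if not token_probs:
        raise ValueError("token_probs must not be empty")
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def perplexity(mean_loss):
    """Perplexity is exp(mean cross-entropy loss), with the loss in nats."""
    return math.exp(mean_loss)


# A model that gives every correct token probability 0.5 has perplexity about 2:
# on average it is as uncertain as a choice between two equally likely tokens.
print(perplexity(mean_token_loss([0.5, 0.5, 0.5, 0.5])))

# The same conversion applied to the final validation loss in the training log.
print(perplexity(2.941))
```

Lower is better, and a perplexity of 1 would mean the model assigns probability 1 to every correct token.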

Here we verify the fine-tuned model simply by running inference on a few questions. Example commands:

# Ask the original model

mlx_lm.generate --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --prompt "蓝牙耳机坏了应该看什么科"

# Ask the fine-tuned model

mlx_lm.generate --model qwen2.5-0.5B-new --prompt "蓝牙耳机坏了应该看什么科"

# Ask the original model

mlx_lm.generate --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --prompt "今天星期几?"

# Ask the fine-tuned model

mlx_lm.generate --model qwen2.5-0.5B-new --prompt "今天星期几?"

(FineTune) maruifu@XMG-M4ProMax lora % mlx_lm.generate --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --prompt "蓝牙耳机坏了应该看什么科"

==========

Prompt: <|im_start|>system

You are Qwen, created by Alibaba Cloud. You are a helpful assistant.<|im_end|>

<|im_start|>user

蓝牙耳机坏了应该看什么科<|im_end|>

<|im_start|>assistant

蓝牙耳机坏了,通常需要检查以下几个方面来确定问题所在:

1. **电源和连接线**:确保耳机的电源线和连接线没有损坏。如果连接线松动或损坏,可能会导致耳机无法正常工作。

2. **耳机内部**:检查耳机内部是否有损坏或松动的部件。例如,如果耳机的麦克风或扬声器出现问题,可能会导致耳机无法正常工作。

3. **耳机的电池**:如果耳机的电池已经

==========

Prompt: 36 tokens, 639.492 tokens-per-sec

Generation: 100 tokens, 220.372 tokens-per-sec

Peak memory: 1.005 GB

(FineTune) maruifu@XMG-M4ProMax lora % mlx_lm.generate --model qwen2.5-0.5B-new --prompt "蓝牙耳机坏了应该看什么科"

==========

Prompt: <|im_start|>system

You are Qwen, created by Alibaba Cloud. You are a helpful assistant.<|im_end|>

<|im_start|>user

蓝牙耳机坏了应该看什么科<|im_end|>

<|im_start|>assistant

耳鼻喉科

==========

Prompt: 36 tokens, 607.020 tokens-per-sec

Generation: 5 tokens, 244.010 tokens-per-sec

Peak memory: 1.005 GB

(FineTune) maruifu@XMG-M4ProMax lora % mlx_lm.generate --model /Users/maruifu/Desktop/AI/qwen2.5-0.5B --prompt "今天星期几?"

==========

Prompt: <|im_start|>system

You are Qwen, created by Alibaba Cloud. You are a helpful assistant.<|im_end|>

<|im_start|>user

今天星期几?<|im_end|>

<|im_start|>assistant

很抱歉,我无法直接获取当前日期和时间。不过,我可以帮助你查询或回答关于日期和时间的问题。请告诉我你需要查询的具体日期和时间,我会尽力提供帮助。

==========

Prompt: 33 tokens, 501.995 tokens-per-sec

Generation: 41 tokens, 221.940 tokens-per-sec

Peak memory: 1.004 GB

(FineTune) maruifu@XMG-M4ProMax lora % mlx_lm.generate --model qwen2.5-0.5B-new --prompt "今天星期几?"

==========

Prompt: <|im_start|>system

You are Qwen, created by Alibaba Cloud. You are a helpful assistant.<|im_end|>

<|im_start|>user

今天星期几?<|im_end|>

<|im_start|>assistant

星期八

==========

Prompt: 33 tokens, 600.904 tokens-per-sec

Generation: 3 tokens, 279.420 tokens-per-sec

Peak memory: 1.004 GB

(FineTune) maruifu@XMG-M4ProMax lora %

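As the output shows, the fine-tuning has taken effect: the fused model now returns the answers from the training set ("耳鼻喉科", the ENT department, and "星期八", "the eighth day of the week") instead of the original model's generic replies.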
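The Prompt sections in the output above show that the text passed to `--prompt` is wrapped in a chat template before it reaches the model. The sketch below rebuilds that wrapping as a plain string in the ChatML-style format visible in the log. The function name is hypothetical, and the exact newline placement is an assumption inferred from the printed prompt.

```python
DEFAULT_SYSTEM = "You are Qwen, created by Alibaba Cloud. You are a helpful assistant."


def build_chatml_prompt(user_message, system_message=DEFAULT_SYSTEM):
    """Wrap a user message in the system/user/assistant markers seen in the log."""
    return (
        f"<|im_start|>system\n{system_message}<|im_end|>\n"
        f"<|im_start|>user\n{user_message}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


print(build_chatml_prompt("今天星期几?"))
```

The prompt ends with the opening assistant marker, so whatever the model generates next is read as the assistant's reply.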

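Closing thoughts

This article walked through a simple fine-tuning run with MLX, Apple's official framework. I hope it helps readers who are interested in fine-tuning understand each stage of the process. A local fine-tuning setup is hard to use for real production projects, but it is still a good way to learn stages such as data cleaning, hyperparameter tuning, and model validation, and to build familiarity and intuition for fine-tuning.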

This article was written by 小马哥 and is licensed under the Creative Commons Attribution 4.0 International License.

Last edited: 2025/02/10 02:24

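Hi OP, I followed the whole walkthrough using the complete Ruozhiba (弱智吧) set of 2000 examples, but once the train loss reached about 1.5 it kept bouncing around and finished at 1.5, and asking questions afterwards showed the fine-tuning had not worked. With a larger dataset, do other parameters need to change as well?

If the dataset itself is fine, try adjusting the learning rate or adding regularization.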